10 more Cognitive Biases illustrated

Learn "The Art of Thinking Clearly" in a visual way!

We are now at Part 3 of the visual summary for the book 📖 'The Art of Thinking Clearly' by Rolf Dobelli.

In case you missed the first two parts (biases 1 to 20), you can find them here:

I find learning about cognitive biases so interesting!

Some of them, even though they fail logic tests, can be harmless or even useful in specific situations. But being aware of how our brains work, and of their tendency to fall into traps, can help us stay humble in our interactions with others and, I hope, make better decisions in our lives.

I’m planning to produce 10 posts altogether, illustrating 10 biases each. I may pause in between to share other books, so stay tuned!

If you haven’t already, I invite you to subscribe:

As always, I’m grateful for your readership, and I love reading your feedback and thoughts, whether shared in the comments or by replying to the email!

Liking bias:

We are more influenced by the people we know and like.

This makes us more vulnerable to advertising or unreasonable demands.

Example: the “influencer” economy

Endowment effect:

We value a thing more when we own it than we value the same thing when it is not ours.

Coincidence:

We underestimate the likelihood of the unexpected.

Example: two similar events happening back to back.

Groupthink:

Even a group full of smart people can make irrational decisions.

Groupthink happens when we prioritize harmony and consensus over critical thinking.

Giving a negative opinion is hard:

- There may be fear of negative consequences (real or imagined)

- You may be swayed by the apparent confidence of a leader

- You may assume that the whole group is behind the decision (while everyone else is also silencing their own concerns).

All of this leads to conformity and flawed decision-making.

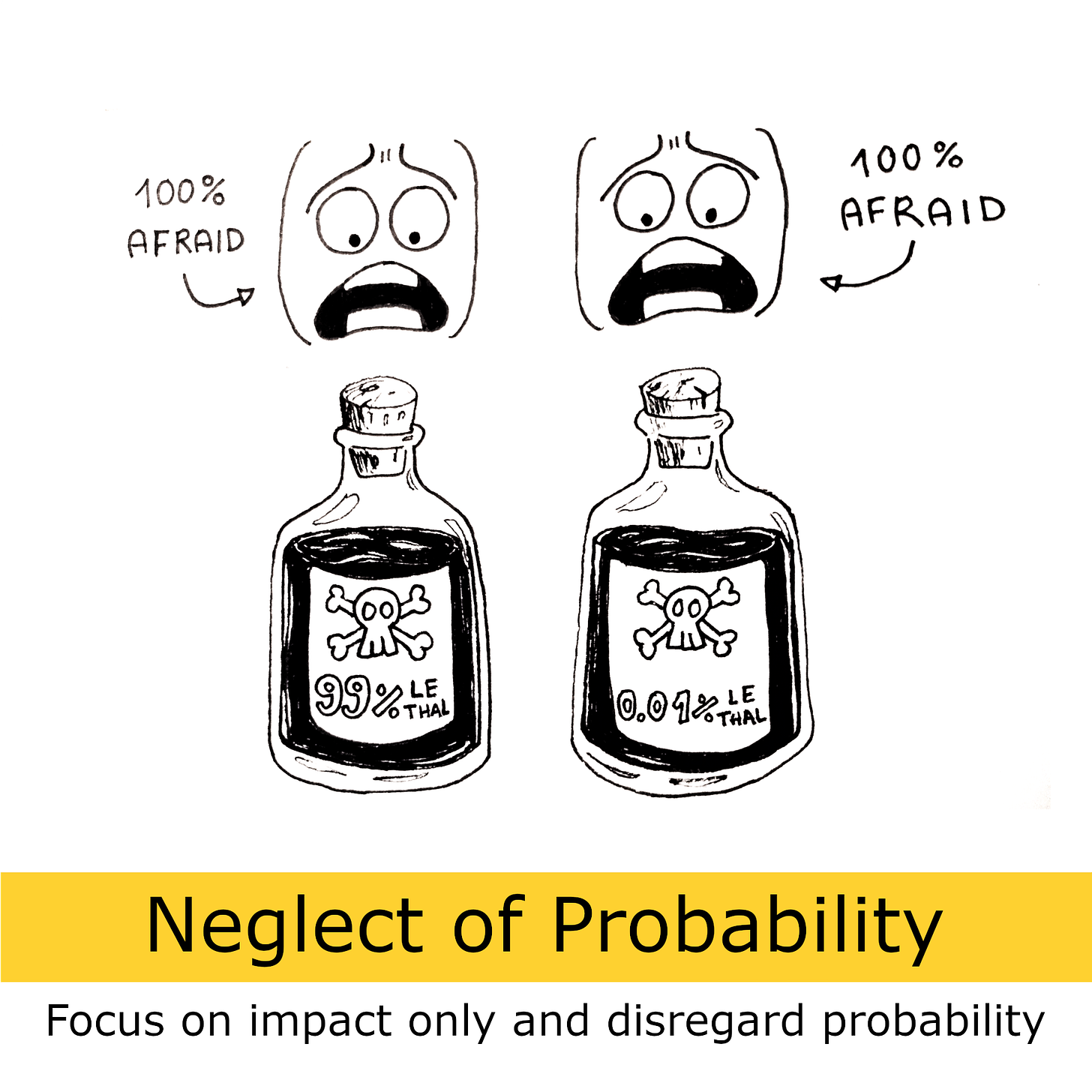

Neglect of probability:

We struggle to understand probability intuitively, often focusing more on the potential impact of an event rather than its actual chances.
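A quick expected-value calculation shows what we skip when we look only at the size of the outcome. The two lotteries below are purely hypothetical (made-up prizes and odds, not real games); the sketch simply multiplies each prize by its chance of winning, using Python's `fractions.Fraction` to keep the arithmetic exact.

```python
from fractions import Fraction

# Two hypothetical lotteries (made-up numbers, for illustration only):
# name -> (prize in dollars, chance of winning)
LOTTERIES = {
    "A": (10_000_000, Fraction(1, 100_000_000)),
    "B": (1_000_000, Fraction(1, 1_000_000)),
}


def expected_value(prize: int, chance: Fraction) -> Fraction:
    """Average winnings per ticket: the prize weighted by its probability."""
    return prize * chance


if __name__ == "__main__":
    for name, (prize, chance) in LOTTERIES.items():
        ev = float(expected_value(prize, chance))
        print(f"Lottery {name}: prize ${prize:,}, expected value ${ev:.2f}")
```

With these made-up numbers it prints an expected value of $0.10 per ticket for lottery A and $1.00 for lottery B: the bigger prize is the worse bet once its odds are taken into account.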

Scarcity error:

When an option is taken away or scarce, we often find it more appealing.

Base-rate neglect:

We tend to ignore the overall frequency or probability of an event when making judgments or decisions. Instead, we often focus excessively on specific information or characteristics, such as individual cases or anecdotal evidence. This can lead to errors in judgment.

For example, in the illustration, we may assume James is more likely to be a literature professor because he likes reading, even though there are far more truck drivers who like to read than there are literature professors.

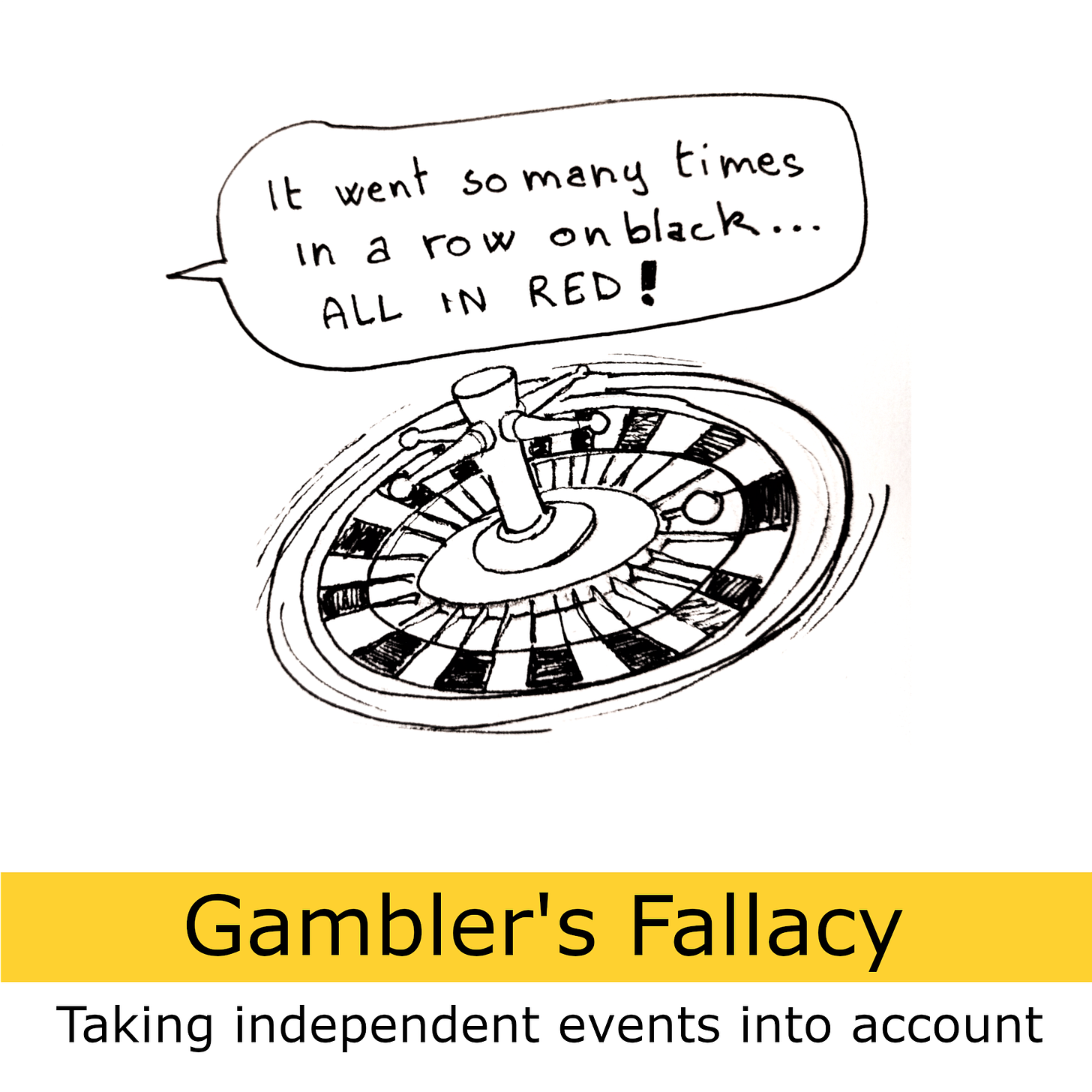
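To put rough numbers on the James example, here is a minimal Bayes' rule sketch. All four inputs are hypothetical assumptions chosen for illustration, not real statistics: 3,000,000 truck drivers, 10,000 literature professors, and 90% of professors versus only 10% of truck drivers liking to read.

```python
# Hypothetical population sizes: illustrative assumptions, not real statistics.
TRUCK_DRIVERS = 3_000_000
LIT_PROFESSORS = 10_000

# Hypothetical share of each group who like reading (also assumptions).
P_READS_GIVEN_PROFESSOR = 0.90
P_READS_GIVEN_DRIVER = 0.10


def p_professor_given_reader(n_prof, n_driver, p_read_prof, p_read_driver):
    """Bayes' rule: P(professor | likes reading) in a two-group population."""
    readers_prof = n_prof * p_read_prof          # professors who like reading
    readers_driver = n_driver * p_read_driver    # truck drivers who like reading
    return readers_prof / (readers_prof + readers_driver)


if __name__ == "__main__":
    p = p_professor_given_reader(
        LIT_PROFESSORS, TRUCK_DRIVERS, P_READS_GIVEN_PROFESSOR, P_READS_GIVEN_DRIVER
    )
    print(f"P(professor | likes reading) = {p:.3f}")
```

With these made-up numbers it prints `P(professor | likes reading) = 0.029`: even though a professor is nine times more likely to be a reader, there are so many more truck drivers that a random reader is far more likely to drive a truck.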

Gambler’s fallacy:

We often confuse independent events with dependent ones, like assuming that because the ball has landed on black ten times in a row, it is due to land on red soon.
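The fallacy can be checked with a small simulation. This sketch assumes a simplified wheel with only red and black (no green zero), each equally likely, with every spin independent of the others. It uses streaks of five blacks instead of ten because a run of ten is rare (each window of ten spins has a 1 in 1,024 chance of being all black), which would make a quick run too sparse. The function name `red_after_black_streak` is just an illustrative choice.

```python
import random


def red_after_black_streak(n_spins: int, streak: int, seed: int = 0) -> float:
    """Estimate P(red | the previous `streak` spins were all black).

    Simplifying assumption: a wheel with only red and black (no green zero),
    each with probability 0.5, and every spin independent of the others.
    """
    rng = random.Random(seed)
    blacks_in_a_row = 0    # length of the current run of consecutive blacks
    after_streak = 0       # spins that come right after >= `streak` blacks
    red_after_streak = 0   # how many of those spins landed on red
    for _ in range(n_spins):
        is_red = rng.random() < 0.5
        if blacks_in_a_row >= streak:
            after_streak += 1
            red_after_streak += int(is_red)
        # A red spin ends the run of blacks; a black spin extends it.
        blacks_in_a_row = 0 if is_red else blacks_in_a_row + 1
    return red_after_streak / after_streak


if __name__ == "__main__":
    estimate = red_after_black_streak(500_000, 5)
    print(f"P(red after 5 blacks in a row) ~ {estimate:.3f}")
```

The printed estimate should land close to 0.5: the wheel has no memory, so a run of blacks does not make red any more likely on the next spin.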

Anchors:

We focus heavily on the first piece of information we receive (the "anchor") when making decisions or estimates. Subsequent judgments are then adjusted from this initial anchor, often insufficiently. This can lead to errors in judgment, as the initial anchor may be arbitrary or unrelated to the decision at hand.

This bias is also a good reason for leaders to refrain from stating their opinion first if they want to hear what their team truly thinks.

You can find more on this here:

Induction:

The inclination to infer universal truths from individual, often past, observations. This is illustrated by the "turkey problem": a turkey enjoys a great life, and grows ever more confident in it, until Thanksgiving.

This example comes from Nassim Taleb’s great book “Antifragile”, which I have illustrated in full in a series of posts:

And that’s all for this week!

If you liked it, let me know and please share it around.

The next post can be found here:
